To wild: to make wild. To see and hear the wild underneath the tame, to know it is there and embrace it fully, pull it out from under, with love.

I am honoured and grateful to be part of a group of artists and thinkers in the research cohort Wilding AI. Over the last few years, we have met in different locales – online and around the world – to think about what it means to work and create in this so-called algorithmic moment.

As collectives do, we all have many differing ideas and interests (I’ve written about our residency at Fiber Festival in Amsterdam earlier this year in a blog post here). We recently finished a residency at Université de Montreal’s Laboratoire formes · ondes, and presented a collection of works at a sold-out show at SAT Montreal.

This project was the rubber-meets-the-road moment for our many discussions, Google Meets, emails, scholarly articles, residencies, dancing, meals and hugs over the last few years. It was the first time we all sat down to make something that we could share with others.

The process of discussion between us and the many open labs we have hosted around the world have been an incredible watering of the soil. We’ve wrestled with software, with plugins, GitHub repos, jet lag (so much jet lag), Google Colab and Jupyter notebooks, microphones, the world on fire, speakers, guitars and drumsticks, our own bodies and voices in the moment of making. We’ve all approached what it means to make with tools that are (supposedly) efficient, that prioritize efficiency and automation and want us to see and use them in that way.

Some of us did that, some of us didn’t (or maybe it would be more accurate to say we all did to varying degrees).

But what we also did was invite ourselves and each other to be wild: to ask ourselves what is down deep, what is wild in our own minds, hands, bodies, ears, hearts, and to allow that wildness to sing in our making with the machine. Each song was different; each song was wild. And the discussions we had among ourselves and with others were wild too.

Every moment we talked with others about being wild in this machine space – about rejecting some part of the workflow, or listening to the composition and what it needed despite/beyond/outside the math, or hearing the algorithm break down and embracing the possibility of that endpoint – I saw it land with people. I saw it dawn on them that there is room, that the machine imperative can be lived with instead of lived under. I saw it land that they can listen to their bodies and their ancestors, that they can be slow, that they can be human and live in the gap between machine math and the mess and wildness of what it means to be on this earth, together.

And that is what we, WAI, did in Montreal – we were together, on this earth, listening, sharing and being wild.

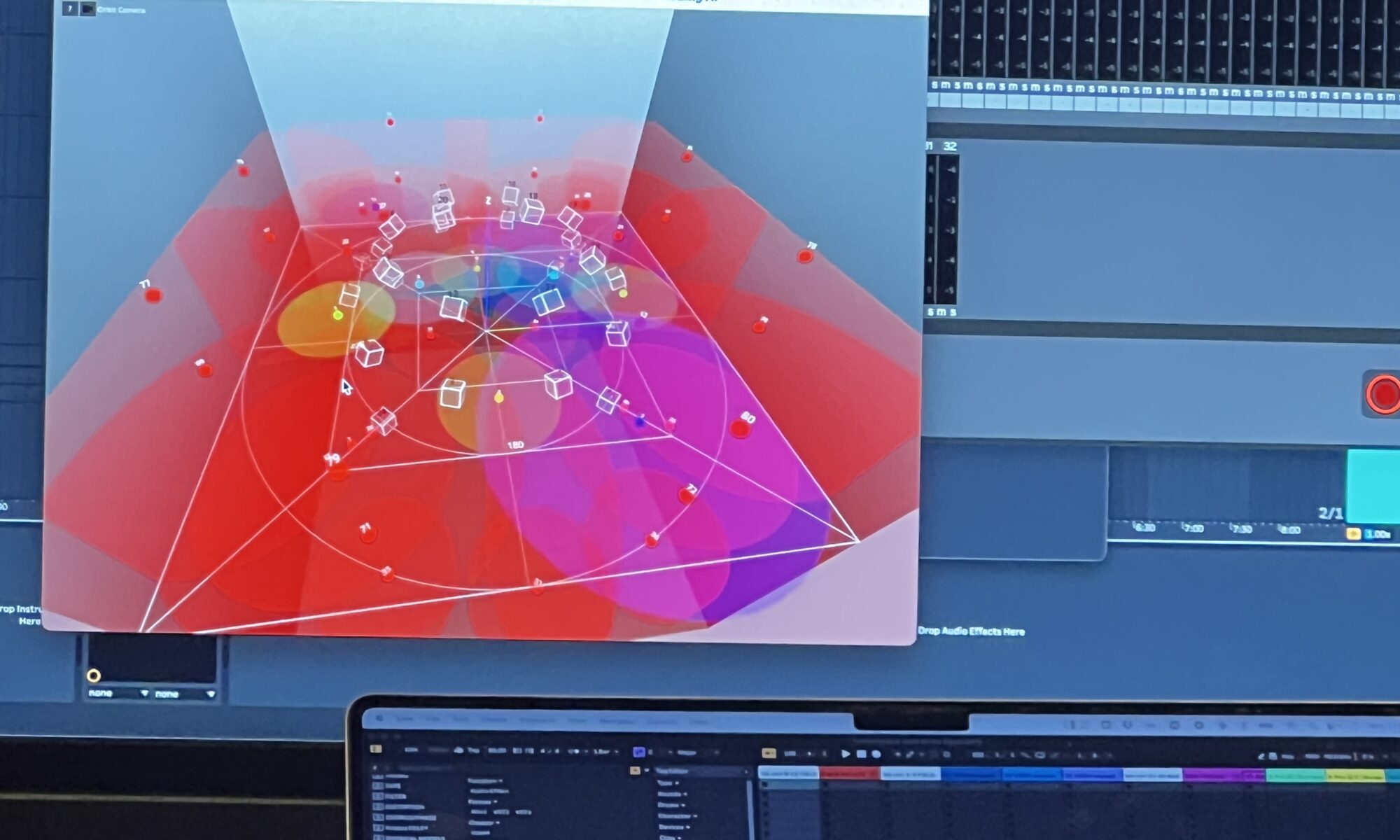

(The image for this post comes from SpatGRIS, the spatialization software used at the Laboratoire. More info here.)